Site Crawling Options

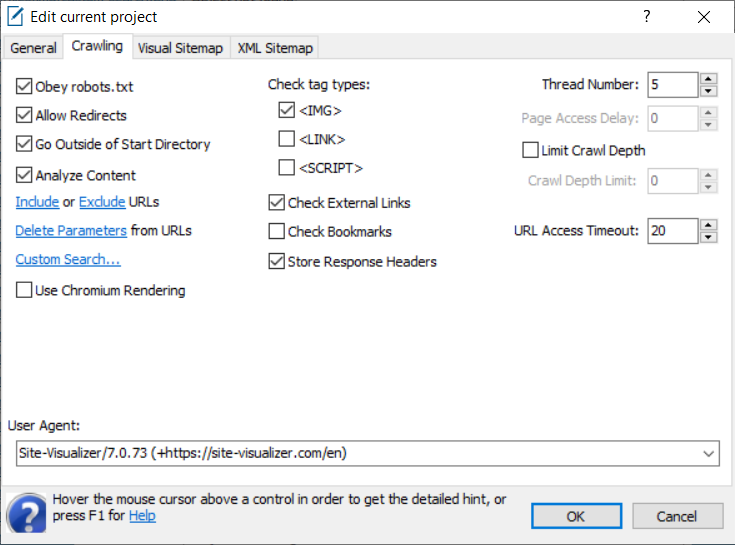

This tab of the project settings window contains options that control how the program connects to a website's pages and how it analyzes their links and content:

Obey robots.txt

The robots.txt file is a simple text file that contains the rules for search bots and other site crawlers to follow. You can find more details about the robots.txt protocol specification at the official site.

This option makes the program crawl a site's pages just as a search bot does when indexing the site. If the option is checked (it is checked by default), the Site Visualizer bot won't try to access any forbidden URLs. Otherwise, the program's bot will crawl every link it finds in the HTML text of a page.
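
To illustrate how a crawler can decide whether a URL is forbidden, here is a minimal sketch that uses Python's standard `urllib.robotparser` module. It is not Site Visualizer's own code; the rules in `ROBOTS_TXT`, the `ExampleBot` name, and the `is_allowed` helper are assumptions made for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt, user_agent, url):
    """Return True if the robots.txt rules allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("http://example.com/public.html",
                "http://example.com/private/page.html"):
        print(url, is_allowed(ROBOTS_TXT, "ExampleBot", url))
```

With the option checked, a URL for which such a check fails would simply not be requested.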

Allow Redirects

Defines whether to access the URL that a link redirects to.

Go Outside of Start Directory

If this option is checked, the whole website is crawled. Uncheck it if you need to crawl only a particular directory of a website. In that case, specify the Site URL on the General tab including the directory to crawl and a trailing slash, e.g. 'http://example.com/dir/'. Specifying the Site URL without a trailing slash (e.g., 'http://example.com/page') means that you want to crawl only the root directory of http://example.com/, omitting /dir/ and other directories.
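
The trailing-slash rule can be pictured as "everything up to the last slash is the start directory". The sketch below shows that reading with Python's standard `urllib.parse` module. The `start_directory` helper is hypothetical, and the program's actual logic may differ.

```python
from urllib.parse import urlsplit, urlunsplit


def start_directory(site_url):
    """Return the directory part of a Site URL (everything up to the last slash)."""
    parts = urlsplit(site_url)
    # Drop whatever follows the last slash of the path; an empty path becomes "/".
    directory = parts.path.rsplit("/", 1)[0] + "/"
    return urlunsplit((parts.scheme, parts.netloc, directory, "", ""))


if __name__ == "__main__":
    print(start_directory("http://example.com/dir/"))
    print(start_directory("http://example.com/page"))
```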

Analyze Content

Enables counting of the words, sentences, syllables, complex words, and characters in a page's content. Based on these values, the following readability scores are calculated (use the reports of the Content category to view them):

- Flesch Reading Ease

- Flesch-Kincaid Grade Level

- Gunning Fog

- SMOG

- Coleman-Liau

- Automated Readability

- Dale–Chall Readability
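
As an illustration of how such scores are derived from the counts, the sketch below computes the two Flesch scores with their standard published formulas. The function names are hypothetical, and the way Site Visualizer itself counts words, sentences, and syllables is not shown here.

```python
def flesch_reading_ease(words, sentences, syllables):
    """Flesch Reading Ease: higher values mean easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words, sentences, syllables):
    """Flesch-Kincaid Grade Level: approximate US school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


if __name__ == "__main__":
    # Example counts: 100 words, 5 sentences, 150 syllables.
    print(round(flesch_reading_ease(100, 5, 150), 3))
    print(round(flesch_kincaid_grade(100, 5, 150), 2))
```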

Include URLs

This feature allows you to crawl only certain URLs (or directories). This may be useful when crawling extremely large websites.

On the Crawling tab of the project settings window, click the Include link. In the dialog box that appears, type the URLs (or parts of a URL, such as a directory or file name) that should be crawled, one per line. Only links whose URL contains at least one of the specified strings will be crawled.

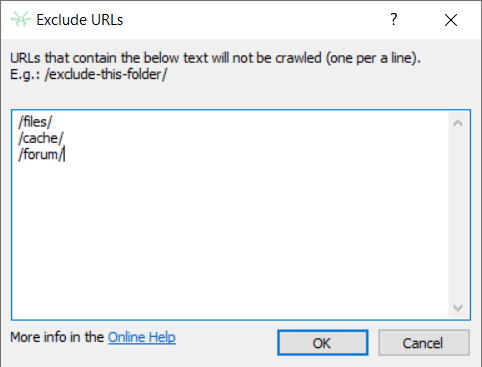

Exclude URLs

This feature allows you to define a list of URLs or directories that should be skipped while crawling. It works like the Include URLs feature above, but in reverse: links whose URL contains at least one of the specified strings will not be crawled:

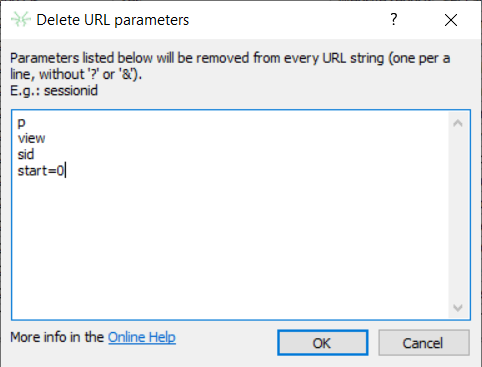
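
The substring matching described for the Include and Exclude lists can be sketched as follows. The `should_crawl` helper is hypothetical. The sketch also assumes that matching is case-sensitive and that an exclusion wins when a URL matches both lists, neither of which is stated above.

```python
def should_crawl(url, include=(), exclude=()):
    """Decide whether a URL passes the Include and Exclude substring lists."""
    # With a non-empty Include list, the URL must contain at least one entry.
    if include and not any(text in url for text in include):
        return False
    # Any match in the Exclude list rejects the URL (assumed precedence).
    return not any(text in url for text in exclude)


if __name__ == "__main__":
    print(should_crawl("http://example.com/blog/post-1", include=["/blog/"]))
    print(should_crawl("http://example.com/shop/item", exclude=["/shop/"]))
```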

Delete Parameters from URLs

Opens a dialog window in which you can specify parameters to be deleted from a URL string. In most cases these are session IDs, category IDs, preview modes, and other parameters that make a URL different even though it still refers to the same page of the website. Removing such parameters makes the sitemap cleaner and smaller, which in turn simplifies site analysis. It also lets you submit URLs to Google and other search engines without such "garbage" parameters.

In the dialog window that appears, type the parameters you want to exclude from URLs. Each line should contain a single parameter (without '?', '&', or any other delimiters). For instance, the picture below contains a set of parameters for crawling a phpBB forum:

Click the OK button to save the parameter list to the current project.

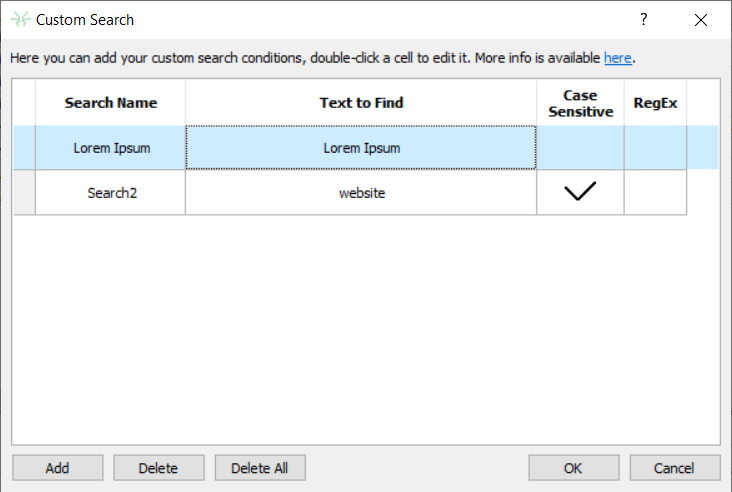
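
To show the effect of deleting parameters, here is a minimal sketch based on Python's standard `urllib.parse` module. The `delete_parameters` helper and the sample URL are assumptions for illustration; `sid` stands in for a phpBB-style session ID.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def delete_parameters(url, names):
    """Return url with every query parameter listed in names removed."""
    parts = urlsplit(url)
    kept = [(key, value)
            for key, value in parse_qsl(parts.query, keep_blank_values=True)
            if key not in names]
    return urlunsplit(parts._replace(query=urlencode(kept)))


if __name__ == "__main__":
    # Two URLs that differ only by session ID collapse into one.
    print(delete_parameters("http://example.com/viewtopic.php?f=2&t=15&sid=abc123", {"sid"}))
    print(delete_parameters("http://example.com/viewtopic.php?f=2&t=15&sid=def456", {"sid"}))
```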

Custom Search

Searches for text inside a page's source code, including content, HTML tags, and comments.

Click the Add button to add a new search condition; click Delete or Delete All to delete the current condition or all conditions. Double-click a cell of the table to edit it: change the search text, rename the current search, or check or uncheck the Case Sensitive and RegEx columns. If the RegEx cell is checked, the application treats the search text as a regular expression (PCRE). Click OK to save the changes.

Once crawling is complete, run the Custom Search report. It lists all the URLs where the search text (or regular expression) was found, as well as the number of occurrences.
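
The Case Sensitive and RegEx switches can be pictured with the sketch below. It uses Python's `re` module, whose syntax is close to but not identical to PCRE, so treat it only as an approximation. The `count_occurrences` helper and the sample page source are hypothetical.

```python
import re


def count_occurrences(source, search_text, case_sensitive=False, regex=False):
    """Count matches of search_text in a page's source code."""
    # Plain text is escaped so that characters like '.' or '%' match literally.
    pattern = search_text if regex else re.escape(search_text)
    flags = 0 if case_sensitive else re.IGNORECASE
    return sum(1 for _ in re.finditer(pattern, source, flags))


if __name__ == "__main__":
    page = "<h1>Sale</h1><!-- sale --><p>SALE 50%</p>"
    print(count_occurrences(page, "sale"))
    print(count_occurrences(page, "sale", case_sensitive=True))
    print(count_occurrences(page, r"\d+%", regex=True))
```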

Use Chromium Rendering

Some websites rely on client-side JavaScript, which means that the HTML source code of a page (or part of it) is generated by a script. Executing that script requires a web browser. Only after the script has run can the resulting HTML be parsed for links, headings, images, and other HTML tags.

Site Visualizer has an embedded Chromium browser that renders website pages just as Google Chrome does: it runs client-side JavaScript, applies CSS styles, loads additional resources, etc. The Use Chromium Rendering option is intended for crawling such JavaScript websites.

Crawl Secured Pages

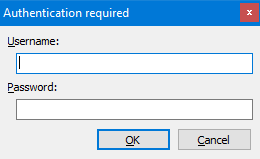

When the program reaches a protected area, it asks for your credentials:

In the dialog box that appears, specify the username and password. If the credentials are correct, Site Visualizer will crawl the secured pages.

Check Tag Types

These parameters allow you to define which tags should be parsed and added to the Links table. This is useful when you need to exclude, for example, occurrences of links to images, CSS files, or JavaScript files:

- <IMG> – uncheck it if you need to exclude the <IMG> image tags from the site's HTML text parsing and prevent adding it to the Links table.

- <LINK> – in most cases these tags link a page's HTML text to one or more Cascading Style Sheets (*.CSS) files. Like the <IMG> tag, the <LINK> tag is just a reference to a file, not a hyperlink to a page of a website. It doesn't transfer any "page weight," so this option can usually be unchecked for SEO analysis.

- <SCRIPT> – this tag, just like <IMG> and <LINK>, is a reference to a JavaScript (*.JS) file. If you don't want to see such references in the Links table, keep this option unchecked.
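
The effect of these checkboxes can be sketched with Python's standard `html.parser` module. This is an illustration only: the `collect_links` helper, the `URL_ATTRIBUTE` table, and the sample HTML are assumptions, and the real Links table holds more information than a tag name and a URL.

```python
from html.parser import HTMLParser

# Attribute that carries the URL for each supported tag.
URL_ATTRIBUTE = {"a": "href", "img": "src", "link": "href", "script": "src"}


class LinkCollector(HTMLParser):
    """Collect (tag, url) pairs, but only for the enabled tag types."""

    def __init__(self, enabled_tags):
        super().__init__()
        self.enabled_tags = set(enabled_tags)
        self.links = []

    def handle_starttag(self, tag, attrs):
        attribute = URL_ATTRIBUTE.get(tag)
        if attribute is None or tag not in self.enabled_tags:
            return
        value = dict(attrs).get(attribute)
        if value:
            self.links.append((tag, value))


def collect_links(html, enabled_tags):
    collector = LinkCollector(enabled_tags)
    collector.feed(html)
    return collector.links


if __name__ == "__main__":
    html = ('<link rel="stylesheet" href="style.css"><a href="/about">About</a>'
            '<img src="logo.png"><script src="app.js"></script>')
    print(collect_links(html, {"a"}))                   # all three boxes unchecked
    print(collect_links(html, {"a", "img", "link", "script"}))  # all checked
```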

Check External Links

Leave this option switched on to check the links that point from the website you crawl to external sites. This allows you to find broken external links on your website.

Check Bookmarks

When this option is turned on, links to the same page with different bookmarks are treated as different links. For instance, example.com/somepage#b1 and example.com/somepage#b2 are recognized as links to two different pages and are added to the Pages table separately. Each of these bookmarks (#b1 and #b2) is checked for presence on example.com/somepage. If a bookmark is missing, the corresponding Response column contains a "#b1 Not Found" message.
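
A bookmark check of this kind can be sketched as follows, assuming that a bookmark is present when the page has an element with a matching `id` attribute or an `<a name="...">` anchor. The `check_bookmark` helper and the "OK" result string are hypothetical; only the "Not Found" message format comes from the description above.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag


class AnchorCollector(HTMLParser):
    """Collect every id attribute and every <a name="..."> value on a page."""

    def __init__(self):
        super().__init__()
        self.anchors = set()

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if value and (name == "id" or (tag == "a" and name == "name")):
                self.anchors.add(value)


def check_bookmark(url, page_html):
    """Return "OK" or a "#bookmark Not Found" message for the URL's bookmark."""
    _, fragment = urldefrag(url)
    if not fragment:
        return "OK"  # no bookmark to check
    collector = AnchorCollector()
    collector.feed(page_html)
    return "OK" if fragment in collector.anchors else f"#{fragment} Not Found"


if __name__ == "__main__":
    page = '<h2 id="b1">First section</h2><p>Text</p>'
    print(check_bookmark("http://example.com/somepage#b1", page))
    print(check_bookmark("http://example.com/somepage#b2", page))
```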

Store Response Headers

Keep this option checked (the default) to store the headers received in response to each request for an internal URL during crawling.

Thread Number

This parameter defines the number of parallel threads for crawling a website. The maximum value is 25, and the default is 5.

Page Access Delay

This option is only available when the number of threads is reduced to 1, because a delay between URL requests makes sense only in that case. It helps you avoid being banned by sites that block clients sending requests too frequently. A delay of 2 or 3 seconds should be enough.
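
The relationship between the thread number and the access delay can be sketched like this. The `fetch_all` helper and its `fetch` callback are hypothetical stand-ins for the program's internals; only the limits (default 5, maximum 25) and the single-thread delay rule come from the text above.

```python
import time
from concurrent.futures import ThreadPoolExecutor

MAX_THREADS = 25
DEFAULT_THREADS = 5


def fetch_all(urls, fetch, threads=DEFAULT_THREADS, delay_seconds=0.0):
    """Call fetch(url) for every URL, in parallel or one by one with a delay."""
    if not 1 <= threads <= MAX_THREADS:
        raise ValueError(f"threads must be between 1 and {MAX_THREADS}")
    if threads == 1:
        # Single thread: requests are sequential, so a pause between them works.
        results = []
        for index, url in enumerate(urls):
            if index > 0 and delay_seconds > 0:
                time.sleep(delay_seconds)
            results.append(fetch(url))
        return results
    # Several threads: requests overlap, so the delay is ignored.
    with ThreadPoolExecutor(max_workers=threads) as pool:
        return list(pool.map(fetch, urls))


if __name__ == "__main__":
    fake_fetch = lambda url: f"fetched {url}"  # placeholder for a real request
    print(fetch_all(["/a", "/b"], fake_fetch, threads=1, delay_seconds=0.1))
    print(fetch_all(["/a", "/b", "/c"], fake_fetch))
```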

Limit Crawl Depth

Restricts how deep the crawler goes into a site:

- 0 - only the main page of a site will be crawled, e.g. http://example.com.

- 1 - the main page and all pages of the first level will be crawled, e.g. http://example.com/page1, http://example.com/page2, etc.

- And so on up to 9, which is the maximum page level.
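
One way to picture a depth limit is a breadth-first traversal that stops following links once the limit is reached. The sketch below assumes that a page's level is the number of link hops from the main page, which the description above does not state explicitly. The `crawl_with_depth_limit` helper and the `links` dictionary are hypothetical.

```python
from collections import deque


def crawl_with_depth_limit(start, links, max_depth):
    """Return {page: level} for every page reachable within max_depth hops."""
    level_of = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        if level_of[page] == max_depth:
            continue  # do not follow links from pages at the limit
        for target in links.get(page, []):
            if target not in level_of:
                level_of[target] = level_of[page] + 1
                queue.append(target)
    return level_of


if __name__ == "__main__":
    # Hypothetical link structure: page -> pages it links to.
    links = {"/": ["/page1", "/page2"], "/page1": ["/page1/details"]}
    print(crawl_with_depth_limit("/", links, 0))
    print(crawl_with_depth_limit("/", links, 1))
```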

URL Access Timeout

Connection speed usually differs between websites, and even between pages of the same website. This option defines the maximum number of seconds to wait when accessing a URL. Decreasing the value makes crawling faster, because "slow" pages that do not respond within the timeout are abandoned and reported with a "Read timed out" message. If you know that the connection to some pages or to a whole site is slow and you are ready to wait, increase this value.

User Agent

This option allows you to crawl a site on behalf of a search bot, such as Googlebot or Yahoo! Slurp, or to enter a custom user-agent string. By default, the Site Visualizer user agent is used.
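
At the HTTP level, crawling on behalf of a search bot means sending that bot's string in the User-Agent request header. The sketch below builds such a request with Python's standard `urllib.request` module without sending it. The `build_request` helper is hypothetical, and the Googlebot string is given only as an example value.

```python
from urllib.request import Request

# Example user-agent string of a search bot.
GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"


def build_request(url, user_agent):
    """Create a request that identifies itself with the given user agent."""
    return Request(url, headers={"User-Agent": user_agent})


if __name__ == "__main__":
    request = build_request("http://example.com/", GOOGLEBOT_UA)
    # urllib stores header names capitalized as "User-agent".
    print(request.get_header("User-agent"))
```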
